or, Why I'm cancelling my Spotify Premium subscription

Not entirely sure when I started using Spotify but it was probably late 2008 / early 2009 and I've found it to be a revelation of music discovery. I've spent hours just clicking from one artist to another, exploring back catalogues and having a serious listen to full albums in a way that would be quite difficult without already having bought the album or "obtained" it from P2P. Previously, using a combination of Last.fm and Myspace you could get quite close but the Spotify desktop app made the whole experience so much more seamless and enjoyable with full, consistent quality tracks.

I've been a Premium subscriber since 1 Aug 2009, with several factors leading to my decision to pay up. The first was high-bitrate, uninterrupted audio: having some decent audio kit at home, I wanted to make the most of it. The second was the Spotify for Android app, which I could use on my HTC Hero and which is hands down the most convenient means of getting music on a mobile device. Put tracks in a playlist in the desktop app and they magically appear on the device – brilliant.

So, why am I quitting?

1. Cost

To date that's £169.83 in subscription fees: £9.99 a month for 17 months. I tend to buy CDs for £5 off Amazon, so that equates to about 33 CD albums, or about 2 albums a month. I’ve listened to a lot more albums than that during that time, but I doubt there would have been more than 33 that I would have considered buying on CD. I’ve never paid for an MP3, because I refuse to pay the same price as a CD for a lossy version, but I paid for Spotify as the service does offer significantly more, especially when you use the mobile apps. I’m just not sure it’s worth £9.99 a month.
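For anyone wanting to check the sums, here is a minimal sketch of the arithmetic above. The figures are the ones quoted in this post; the variable names are just for illustration.

```python
from decimal import Decimal

# Figures quoted in the post (GBP)
MONTHLY_FEE = Decimal("9.99")  # Spotify Premium per month
MONTHS = 17                    # months subscribed so far
CD_PRICE = Decimal("5")        # typical price paid per CD on Amazon

# Total paid in subscription fees
total_fees = MONTHLY_FEE * MONTHS

# Whole CDs the same money would have bought
cds_affordable = int(total_fees // CD_PRICE)

# Average number of CDs per month of subscription
cds_per_month = cds_affordable / MONTHS

print(f"Total fees: £{total_fees}")
print(f"Equivalent CDs: {cds_affordable}")
print(f"CDs per month: {cds_per_month:.1f}")
```

Decimal is used rather than float so the pence come out exactly.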

2. Quality

Spotify Premium ups the track bitrate from 160kbps to 320kbps. At least that’s the idea. In practice it seems large portions of their library are only available in the lower quality, and I doubt that more than 10% of the tracks I’ve listened to recently have been high bitrate. There’s also no indication of which tracks are "high quality" in the app, so I’m seriously sceptical about whether I’m getting the high bitrates I’m paying for. The quality is certainly still miles off CD audio, and having made a return to CDs recently it’s very noticeable that I’m missing out on audio clarity. I have been making do with poor quality audio whilst also paying for the privilege.
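To put those numbers in context, here is a rough sketch comparing the raw data rate of CD audio with the two Spotify bitrates mentioned above. It assumes the standard CD format (44.1kHz, 16-bit, stereo). Bitrate is only a crude proxy for perceived quality, since a lossy codec throws away data selectively, so treat the ratios as an indication rather than a measure of how much worse it sounds.

```python
# Standard CD audio parameters (Red Book): an assumption for this comparison
SAMPLE_RATE_HZ = 44_100
BIT_DEPTH = 16
CHANNELS = 2

# Uncompressed CD data rate in kbps
cd_kbps = SAMPLE_RATE_HZ * BIT_DEPTH * CHANNELS / 1000

# Spotify bitrates quoted in the post, in kbps
spotify_kbps = {"free": 160, "premium": 320}


def mb_per_minute(kbps: float) -> float:
    """Convert a bitrate in kbps to megabytes per minute of audio."""
    return kbps * 1000 * 60 / 8 / 1_000_000


print(f"CD audio: {cd_kbps:.1f} kbps, {mb_per_minute(cd_kbps):.1f} MB/min")
for tier, kbps in spotify_kbps.items():
    ratio = cd_kbps / kbps
    print(f"{tier}: {kbps} kbps, {mb_per_minute(kbps):.1f} MB/min, "
          f"CD carries {ratio:.1f}x the data")
```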

3. Nothing to show for it

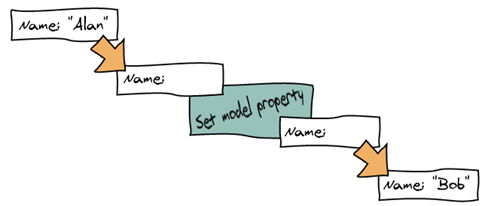

It’s a bitter pill to swallow but worst of all is the fact that after all the cost I’ve just been renting the music. I don’t get to keep the OGG tracks, I don’t own any of it and, when I cancel, the app on my phone will just stop working.

What service would I be happy with?

I’ve been wondering about the kind of service I’d like to see and that I’d be happy to pay for. Unlimited ad-supported listening of any tracks for discovering new music would be fine. I’d like to be able to buy albums, download them in full CD quality and stream them uninterrupted (no ads) in a reasonable bitrate to other computers and mobile devices. I’d also like to be able to register CDs I own with the service so those tracks are also available wherever I am.

The roll-your-own solution might be buying CDs, ripping them and paying $9.99 for a 50GB Dropbox to sync up my machines. Apparently the Dropbox for Android app has the ability to stream music and movies straight to the device, so maybe that’s an option worth considering.

Lossless

In this day and age of high-def video, broadband internet and huge hard disks I don’t want to pay for, and there is no necessity for, low bitrate music. It’s rather interesting that the most widely available medium with the highest audio quality is Blu-ray disc, in the form of Dolby TrueHD and DTS-HD. With video the soundtrack plays more of a supporting role, so lossy compression can be forgiven to some extent, but with music the audio is the main event and it should be CD quality at least. MP3 was great for portability but it has a lot to answer for in terms of killing our appreciation of high quality audio and therefore the market’s desire to provide us with (and push) a high-definition medium solely for audio.

I currently have a
